Full Digital Marketing and SEO Guide for Women's Clothing

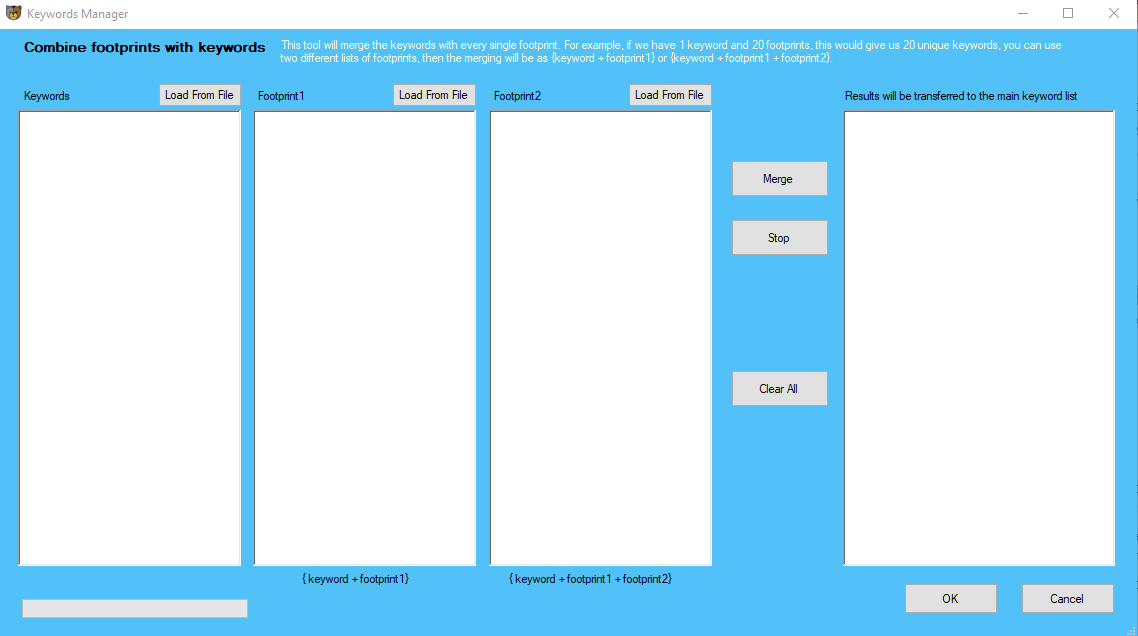

One major drawback of Scrapy is that it does not render JavaScript; you have to send Ajax requests to get data hidden behind JavaScript events, or use a third-party tool such as Selenium. The third option is to use a self-service point-and-click tool, such as Mozenda. Many companies maintain software that allows non-technical business users to scrape websites by building projects using a graphical user interface (GUI). Instead of writing custom code, users simply load a web page into a browser and click to identify data that should be extracted into a spreadsheet.


This can be a huge time saver for researchers who rely on front-end web interfaces to extract data in chunks. Selenium is a different tool when compared with BeautifulSoup and Scrapy. Finally, the data can be summarized at a higher level, for example to show average prices across a category. To automatically extract data from websites, a computer program must be written to the project's specifications. This program can be written from scratch in a programming language or can be a set of instructions entered into specialized web scraping software. Web scraping and web crawling refer to similar but distinct activities.
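The summarizing step mentioned above, averaging prices across a category, can be sketched in a few lines of standard-library Python. The rows and field names (`category`, `price`) are made up for illustration; a real project would feed in whatever the scraper collected.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical scraped rows; real data would come from the scraper itself.
scraped_rows = [
    {"category": "dresses", "price": 40.0},
    {"category": "dresses", "price": 60.0},
    {"category": "jackets", "price": 120.0},
]


def average_price_by_category(rows):
    """Group rows by category and return the mean price of each group."""
    prices = defaultdict(list)
    for row in rows:
        prices[row["category"]].append(row["price"])
    return {category: mean(values) for category, values in prices.items()}


averages = average_price_by_category(scraped_rows)
print(averages)
```

Keeping the aggregation in its own function makes it easy to rerun on fresh scrape results without touching the extraction code.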


Via Selenium's API, you can actually export the underlying code to a Python script, which can later be used in your Jupyter Notebook or text editor of choice. My little example makes use of the simple functionality provided by Selenium for web scraping: rendering HTML that is dynamically generated with JavaScript or Ajax. The Selenium-RC (remote-control) tool can control browsers by injecting its own JavaScript code and can be used for UI testing.

Selenium is an automated testing framework for web applications and websites that can also control the browser to navigate a website just like a human. Beyond testing, Selenium is useful when we want to scrape data from JavaScript-generated content on a webpage, that is, when the data shows up only after many Ajax requests. Nonetheless, both BeautifulSoup and Scrapy are perfectly capable of extracting data from a webpage. The choice of library boils down to how the data in that particular webpage is rendered. When you call next_button.click(), the real web browser responds by executing some JavaScript code.

I was struggling with my personal Python web scraping project because of iframes and JavaScript while using Beautiful Soup. I'll definitely try out the method that you've explained. The first choice I needed to make was which browser I was going to tell Selenium to use. As I generally use Chrome, and it's built on the open-source Chromium project (also used by Edge, Opera, and Amazon Silk browsers), I figured I would try that first.

Selenium

A common error when looping over links: the driver.get function requires a URL string, so passing it a link element x raises an error asking for a URL. ChromeDriver needs to be installed before we start scraping. The Selenium web driver speaks directly to the browser, using the browser's own engine to control it. We can easily program a Python script to automate a web browser using Selenium.
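One way around the driver.get error described above is to read each link's href attribute first and pass that string to driver.get. The sketch below assumes `driver` behaves like a Selenium WebDriver and each item in `link_elements` like a WebElement for an `<a>` tag; the function name `visit_links` is made up for illustration.

```python
def visit_links(driver, link_elements):
    """Open each link's target by URL and return the visited page titles.

    `driver` is expected to behave like a Selenium WebDriver, and each item
    in `link_elements` like a WebElement for an <a> tag.
    """
    # Read the href strings first: once the driver navigates away, the
    # original elements go stale and can no longer be queried.
    urls = [link.get_attribute("href") for link in link_elements]

    titles = []
    for url in urls:
        if not url:
            continue  # skip anchors that have no href
        driver.get(url)  # driver.get takes a URL string, not an element
        titles.append(driver.title)
    return titles
```

With a real driver, the link elements might come from something like `driver.find_elements(By.TAG_NAME, "a")`, where `By` is imported from `selenium.webdriver.common.by`.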

How To Catch An ElementNotVisibleException

Thus, a web scraping project may or may not involve web crawling, and vice versa. Selenium is an open-source web testing tool that allows users to test web applications across different browsers and platforms. It includes a suite of software that developers can use to automate web applications, including IDE, RC, WebDriver, and Selenium Grid, which all serve different purposes. Moreover, it serves the purpose of scraping dynamic web pages, something Beautiful Soup can't do.

Launching The Webdriver

In the early days, scraping was mainly done on static pages: those with known elements, tags, and data. As difficult projects go, though, it is an easy package to deploy in the face of challenging JavaScript and CSS code. The main problem with Scrapy is that it is not a beginner-centric tool. However, I had to drop the idea when I discovered it is not beginner-friendly. When you open the file you get a fully functioning Python script. Selenium is a framework designed to automate tests for your web application. Through the Selenium Python API, you can access all functionalities of Selenium WebDriver intuitively. It provides a convenient way to access Selenium webdrivers such as ChromeDriver, Firefox geckodriver, and so on. Because of this, many libraries and frameworks exist to aid in the development of projects, and there is a large community of developers who currently build Python bots.
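As a rough illustration of accessing ChromeDriver or geckodriver through the Selenium Python API, the sketch below picks a browser by name. The helper names `launch_webdriver` and `print_page_title` are hypothetical, and it assumes the `selenium` package plus the chosen browser are installed. Recent Selenium releases try to locate a matching driver automatically; older ones expect the driver binary on the PATH.

```python
SUPPORTED_BROWSERS = ("chrome", "firefox")


def launch_webdriver(browser="chrome"):
    """Start a Selenium-controlled browser and return the driver object."""
    name = browser.lower()
    if name not in SUPPORTED_BROWSERS:
        raise ValueError(f"Unsupported browser: {browser!r}")

    # Imported here so the name check above works even where Selenium
    # is not installed.
    from selenium import webdriver

    if name == "chrome":
        return webdriver.Chrome()  # driven through ChromeDriver
    return webdriver.Firefox()  # driven through geckodriver


def print_page_title(url, browser="chrome"):
    """Open `url`, print the page title, and always close the browser."""
    driver = launch_webdriver(browser)
    try:
        driver.get(url)
        print(driver.title)
    finally:
        driver.quit()  # release the browser even if the page load fails
```

Calling `print_page_title("https://example.com")` would open a visible Chrome window, print the title, and close the window again.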


With the Selenium Nodes you have the power of a full-blown browser combined with KNIME's processing and data mining capabilities. Your first step, before writing a single line of Python, is to install a Selenium-supported WebDriver for your favorite web browser. In what follows, you will be working with Firefox, but Chrome could easily work too. Beautiful Soup is a Python library built specifically to pull data out of HTML or XML files. Selenium, on the other hand, is a framework for testing web applications.



This makes recruitment of developers easier and also means that help is easier to get when needed from sites such as Stack Overflow. Besides its popularity, Python has a relatively easy learning curve, the flexibility to perform a wide variety of tasks easily, and a clear coding style. Some web scraping projects are better suited to using a full browser to render pages. This may mean launching a full web browser in the same way a regular user might launch one, with web pages loaded and visible on a screen. However, visually displaying web pages is generally unnecessary when web scraping and leads to higher computational overhead.

In recent years, there has been an explosion of front-end frameworks like Angular, React, and Vue, which are becoming more and more popular. Webpages that are generated dynamically can offer a faster user experience; the elements on the webpage itself are created and modified dynamically. These websites are of great benefit, but can be problematic when we want to scrape data from them. Selenium is used for automated testing of web applications. It automates web browsers, and you can use it to carry out actions in browser environments on your behalf. However, it has since been adopted for web scraping.


Selenium uses a web-driver package that can take control of the browser and mimic user-oriented actions to trigger desired events. This guide will explain the process of building a web scraping program that will scrape data and download files from Google Shopping Insights. To learn more about scraping advanced websites, please visit the official docs of Python Selenium. Static scraping was good enough to get the list of articles, but as we saw earlier, the Disqus comments are embedded as an iframe element by JavaScript.

The Selenium IDE allows you to easily inspect elements of a web page by tracking your interaction with the browser and providing alternatives you can use in your scraping. It also provides the opportunity to easily mimic the login experience, which can overcome authentication issues with certain websites. Finally, the export feature provides a quick and easy way to deploy your code in any script or notebook you choose. This guide has covered only some aspects of Selenium and web scraping.

Yet, like many government websites, it buries the data in drill-down links and tables. This often requires "best guess navigation" to find the specific data you are looking for. I wanted to use the public data provided for the colleges within Kansas in a research project. I prefer to remove this variable from the equation and use an actual browser web driver. In this tutorial, you will learn how the content you see in the browser actually gets rendered and how to go about scraping it when necessary.

Selenium can send web requests and also comes with a parser. With Selenium, you can pull data out of an HTML document as you would with the JavaScript DOM API. Scraping the data with Python and saving it as JSON was what I needed to do to get started. In some cases you may prefer to use a headless browser, which means no UI is displayed. Theoretically, PhantomJS is just another web driver. In practice, though, people have reported incompatibility issues where Selenium works properly with Chrome or Firefox and sometimes fails with PhantomJS. It gives us the freedom we need to efficiently extract the data and store it in our preferred format for future use. In this article, we'll learn how to use web scraping to extract YouTube video data using Selenium and Python.
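The two ideas above, running the browser headless and saving scraped data as JSON, can be sketched as follows. This is a minimal sketch that assumes the `selenium` package and Chrome are installed; the helper names `make_headless_chrome` and `save_as_json` are made up for illustration.

```python
import json


def make_headless_chrome():
    """Return a Chrome driver that renders pages without showing a window."""
    # Imported here so the JSON helper below can be used without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless=new")  # older Chrome builds use "--headless"
    options.add_argument("--window-size=1280,1024")  # give pages a realistic viewport
    return webdriver.Chrome(options=options)


def save_as_json(records, path):
    """Write a list of scraped records (plain dicts) to a JSON file."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(records, handle, ensure_ascii=False, indent=2)
```

A typical flow would create the driver, collect records as plain dictionaries, call `driver.quit()`, and then pass the records to `save_as_json`.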

The Full Python Code

In order to harvest the comments, we will need to automate the browser and interact with the DOM. Web crawling and data extraction is a pain, especially on JavaScript-based sites. First, individual websites can be difficult to parse for a variety of reasons. Websites may load slowly or intermittently, and their data may be unstructured or found within PDF files or images. This creates complexity and ambiguity in defining the logic to parse the site. Second, websites can change without notice and in unexpected ways. So, I decided to abandon my traditional methods and look at a possible tool for browser-based scraping.

A major component here, something that most blogs and tutorials on Selenium will address, is the WebDriver. The WebDriver, if you're writing this code from scratch, must be imported and assigned with your browser of choice. In particular, you will learn how to count Disqus comments. Our tools will be Python and awesome packages like requests, BeautifulSoup, and Selenium.

In order to collect this information, you add a method to the BandLeader class. Checking back in with the browser's developer tools, you find the right HTML elements and attributes to select all the information you need. Also, you only want to get information about the currently playing track if there is music actually playing at the time.

There are basic options here (e.g. rename), but this button is important for one reason: to export the code of the test. When this option is selected, you can simply choose the language (Python in our case) and save it to your project folder.

Web scraping projects must be set up in a way to detect changes and then must be updated to accurately collect the same information. Finally, websites may employ technologies, such as captchas, specifically designed to make scraping difficult. Depending on the policies of the web scraper, technical workarounds may or may not be employed. The actual extraction of data from websites is usually just the first step in a web scraping project. Further steps usually must be taken to clean, transform, and aggregate the data before it can be delivered to the end user or application. Furthermore, projects commonly are run on servers without displays. Headless browsers are full browsers with no graphical user interface. They require fewer computing resources and can run on machines without displays. A tradeoff is that they do not behave exactly like full, graphical browsers. For example, a full, graphical Chrome browser can load extensions while a headless Chrome browser cannot.
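The cleaning and transforming step that follows extraction might look like the sketch below. The record fields (`title`, `price`) and the dollar-formatted price strings are assumptions chosen for illustration, not taken from any particular site.

```python
def clean_record(raw):
    """Normalize one scraped record: trim text and parse the price string."""
    title = " ".join(raw["title"].split())  # collapse stray whitespace
    price_text = raw["price"].replace("$", "").replace(",", "").strip()
    return {"title": title, "price": float(price_text)}


def clean_records(raw_records):
    """Clean every record and drop exact duplicates, keeping first-seen order."""
    seen = set()
    cleaned = []
    for raw in raw_records:
        record = clean_record(raw)
        key = (record["title"], record["price"])
        if key not in seen:
            seen.add(key)
            cleaned.append(record)
    return cleaned


# Hypothetical raw rows, as they might come straight off a page.
raw_records = [
    {"title": "  Linen   Dress ", "price": "$1,049.50"},
    {"title": "Linen Dress", "price": "1049.50 "},
    {"title": "Denim Jacket", "price": "$89"},
]
cleaned = clean_records(raw_records)
print(cleaned)
```

Because the price is parsed with `float`, a record whose price text changes format on the site raises a `ValueError`, which is one simple way to notice that a page layout has changed.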
