Search Engine Scraping

Search Engine Scraper

Search engines such as Google or Bing return multiple pages of results when we type a query into the search box, and those pages contain results that match the keywords we entered. Speaking of torrent downloading, you aren't doing anything illegal by browsing torrent search engines and using torrent clients on your system. But you cross the line if you download a torrent containing copyrighted content. In fact, piracy is the reason BitTorrent has grown so much in size. Just like the other websites on this list, Bitcq doesn't store any content on its website.

Search Engine Harvester

You can search for two different queries by separating them with the '|' (pipe) operator. Fossbytes is publishing this list only for educational purposes.

Search Engine Harvester Tutorial

For instance, websites with large amounts of content, such as airlines, consumer electronics retailers and department stores, may be routinely targeted by their competitors just to stay abreast of pricing information. Compunect scraping source code: a range of well-known open source PHP scraping scripts, including a regularly maintained Google Search scraper for scraping advertisements and organic result pages. The more keywords a user needs to scrape and the less time there is for the job, the more difficult scraping will be and the more developed a scraping script or tool needs to be.

Search Engine Scraping

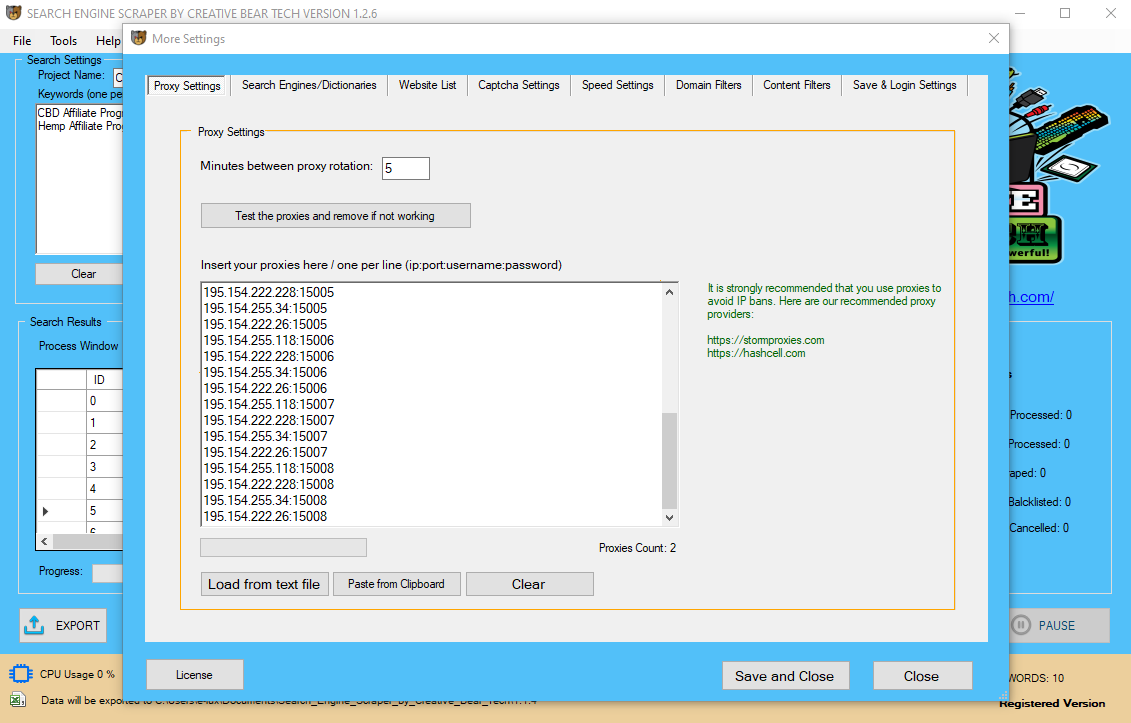

Network and IP limitations are also part of the scraping defense systems. Search engines cannot easily be tricked by changing to another IP, although using proxies is a very important part of successful scraping. The diversity and abuse history of an IP are important as well. Google is by far the largest search engine, with the most users as well as the most advertising revenue, which makes it the most important search engine to scrape for SEO-related companies. The process of entering a website and extracting data in an automated fashion is also often called "crawling".

Just like the other alternatives on this list, Xtorx features only a search bar on its homepage. You just have to click on the blue links to download the torrent files safely in one go.

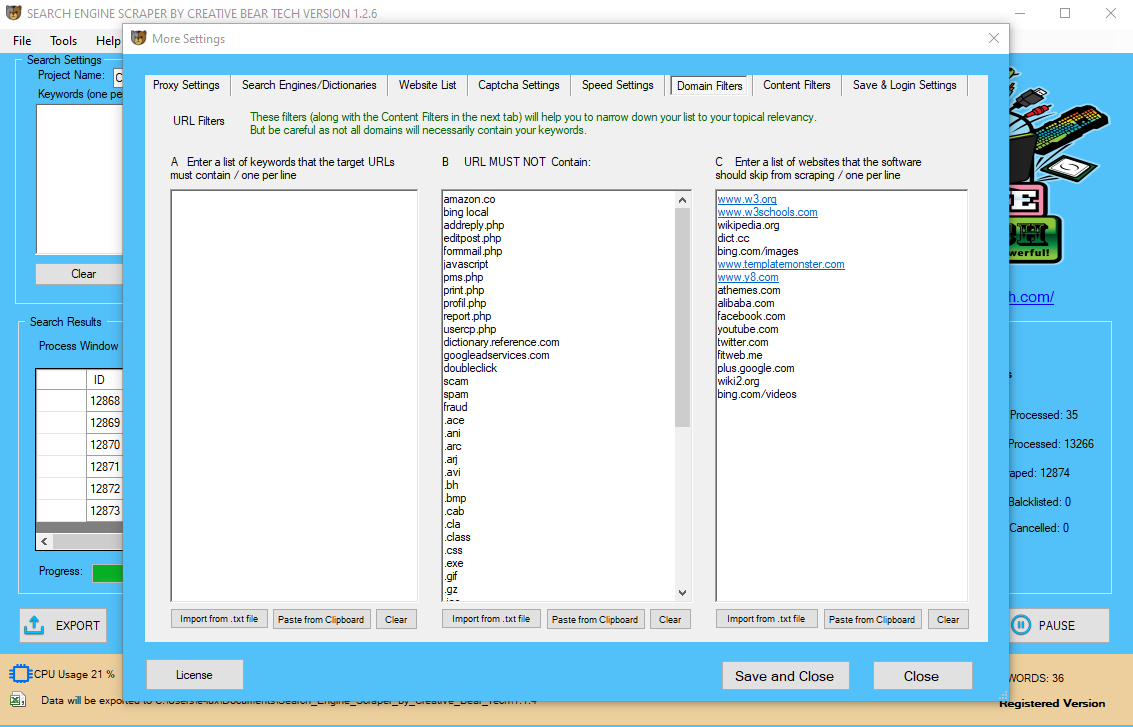

Methods Of Scraping Google, Bing Or Yahoo

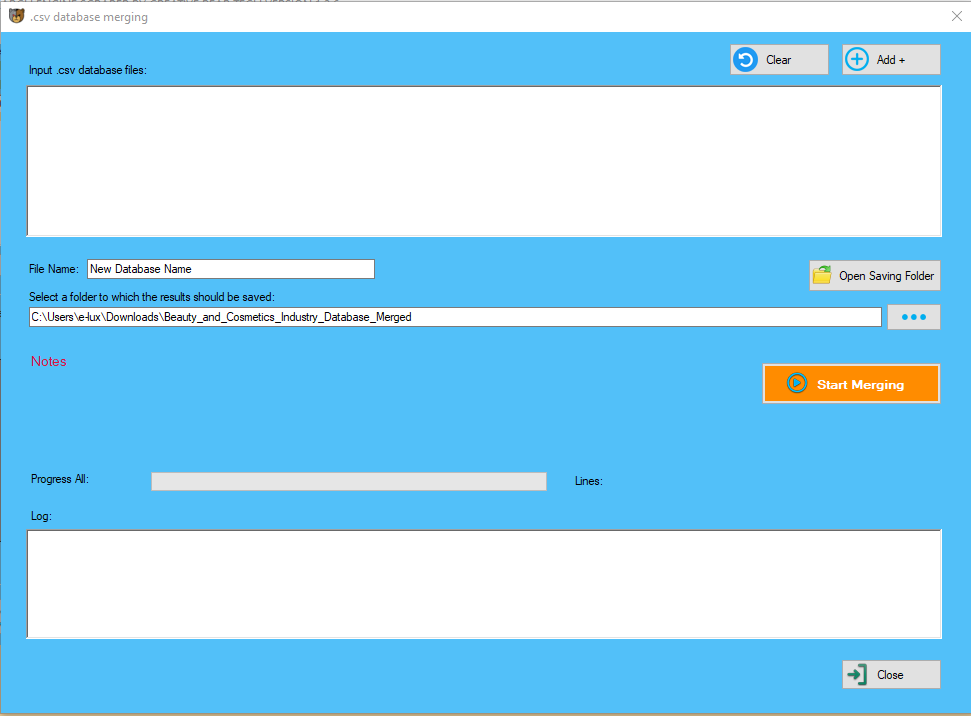

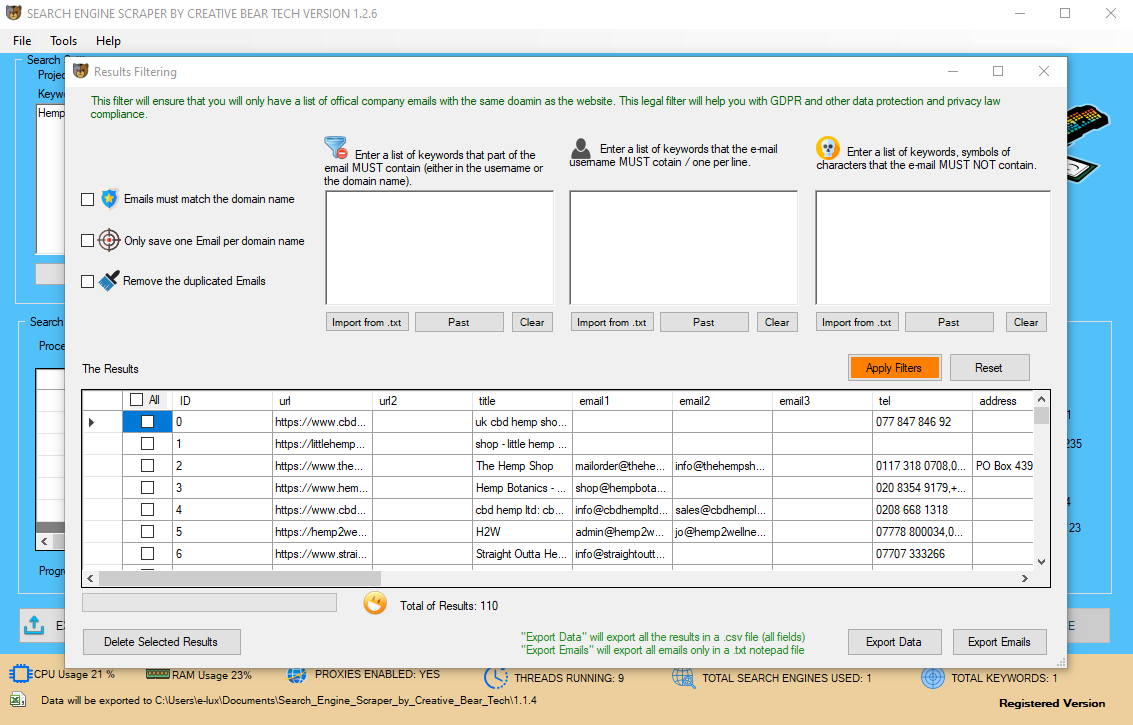

"Enter a list of keywords that the email username must contain": here our aim is to increase the relevancy of our emails and reduce spam at the same time. "Only Save One Email per Domain Name": some domains / websites have several emails, one for customer service, one for marketing, one for returns and so on. You will need to go to "More Settings" on the main GUI and navigate to the tab titled "Website List".

Depending upon the objective of a scraper, the methods by which websites are targeted differ. Google did not describe what criteria it uses when selecting who gets to participate in the free beta program. According to the free trial sign-up page, web publishers will at minimum need an email address. There is also a space for providing a Google Cloud Project Number and a reCAPTCHA v3 key, but they are not required. A scraper is an automated bot that downloads a website's content. Generally the purpose is to republish the content on a spam website.

There's also a .onion link to the website that ensures access even when you're browsing on the Tor network. The USP of this BitTorrent search engine is the advanced search options it offers; for example, you get instant suggestions as soon as you start typing your query.

Google does have a DMCA system that people can use to remove infringing content, but that is a time-consuming process. Potentially, this allows Google's spam team to move against infringing content by treating it as a spam offense rather than a copyright issue.

In a nutshell, these scraping tools make data extraction easier and more efficient, whatever the type of scraping. Search results vary because of changes in location, settings, browsing history, and so on. Also, search engine makers constantly run different experiments on their search engines to give users an enhanced and more responsive experience.

This was when content penalties were introduced, used mainly to eliminate scraper websites that would rip content from one website and put it on their own. This practice was greatly frowned upon because, often, these scraper websites would rank higher for that content than the original website or creator.

On the main GUI, at the top left-hand side, just under "Search Settings", you will see a field called "Project Name". This name will be used to create a folder where your scraped data will be stored and will also be used as the name of the file. For example, if I am scraping cryptocurrency and blockchain data, I would have a project name along the lines of "Cryptocurrency and Blockchain Database". You can also export engine files to share with friends or work colleagues who own ScrapeBox too. "Remove the Duplicated Emails": by default, the scraper will remove all the duplicate emails.

When searching for your favorite torrents, another great torrent search website is Xtorx. You can get your torrent search results instantly, but unfortunately there is no way to filter them. That isn't necessary, though, because Xtorx provides search URLs for other torrent websites: clicking any of the results opens a new search on another torrent site.

The largest publicly known incident of a search engine being scraped occurred in 2011, when Microsoft was caught scraping unknown keywords from Google for its own, then fairly new, Bing service. When developing a search engine scraper there are several existing tools and libraries available that can be used, extended or simply analyzed to learn from.

The custom scraper comes with approximately 30 search engines already trained, so to get started you simply need to plug in your keywords and start it running, or use the included Keyword Scraper. You can add country-based search engines, or even create a custom engine for a WordPress site with a search box to harvest all the post URLs from the site. It is a trainable harvester with over 30 search engines and the ability to easily add your own search engines to harvest from virtually any site.

Scraper sites hope in this way to rank highly in the search engine results pages (SERPs), piggybacking on the original page's page rank. Some scraper sites link to other sites to improve their search engine ranking through a private blog network. Prior to Google's update to its search algorithm known as Panda, a type of scraper site known as an auto blog was quite common among black hat marketers who used a method known as spamdexing. Made for AdSense (MFA) sites are considered search engine spam that dilutes the search results with less-than-satisfactory results. The scraped content is redundant to that which would be shown by the search engine under normal circumstances, had no MFA website been found in the listings.

HTML markup changes are another obstacle: depending on the methods used to harvest the content of a website, even a small change in the HTML can render a scraping tool broken until it is updated.

Re-registering an expired domain allows SEOs to make use of the already-established backlinks to the domain name. Some spammers may try to match the topic of the expired site, or copy the existing content from the Internet Archive, to maintain the authenticity of the site so that the backlinks don't drop. For example, an expired website about a photographer may be re-registered to create a site about photography tips, or the domain name may be used in a private blog network to power the spammer's own photography site. Another type of scraper will pull snippets and text from websites that rank high for keywords they have targeted.

There's even an engine for YouTube to harvest YouTube video URLs, and one for Alexa Topsites to harvest domains with the highest traffic rankings.

"Enter a list of keywords that the email username must contain": here our aim is to increase the relevancy of our emails and reduce spam at the same time. For example, I may want to contact all emails starting with info, hello, sayhi, and so on. "Enter a list of keywords that part of the email must contain (either in the username or the domain name)": this should be your list of keywords that you would like to see in the email. "Remove the Duplicated Emails": by default, the scraper will remove all the duplicate emails.

Essentially, the very reason that publishers originally wanted to be indexed in SERPs is being circumvented. The content taken from other sites is becoming more prominent as Google increases the number of web definitions (such as the example above), direct answers and Knowledge Graph answers it provides in its search results. Crawling is essentially what Google, Yahoo, MSN, etc. do, looking for ANY information.

On the main GUI, at the top left-hand side, just below "Search Settings", you will see a field called "Project Name". For example, if I am scraping cryptocurrency and blockchain data, I would have a project name along the lines of "Cryptocurrency and Blockchain Database". Then go to folder "1.1.1", right-click on it and select "Properties". Then, you will need to uncheck the box "Read-only" and click on "Apply".

You will need to go to "More Settings" on the main GUI and navigate to the tab titled "Website List". Make sure that your list of websites is saved locally in a .txt Notepad file with one URL per line (no separators). Select your website list source by specifying the location of the file.
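To make the email filter options described above more concrete, here is a minimal Python sketch of how such filters could behave. It is an illustration only and not the tool's actual implementation: the function name `filter_emails` and its parameters are hypothetical, and it assumes "contain" means a case-insensitive substring match.

```python
from typing import Iterable, List


def filter_emails(
    emails: Iterable[str],
    username_keywords: Iterable[str] = (),
    any_keywords: Iterable[str] = (),
    one_per_domain: bool = False,
) -> List[str]:
    """Return the addresses that survive the selected filters, in input order."""
    username_keywords = [k.lower() for k in username_keywords]
    any_keywords = [k.lower() for k in any_keywords]
    seen_emails = set()
    seen_domains = set()
    kept = []
    for raw in emails:
        email = raw.strip().lower()
        if email.count("@") != 1:
            continue  # skip anything that is not a simple user@domain string
        username, domain = email.split("@")
        if email in seen_emails:
            continue  # "Remove the Duplicated Emails"
        if username_keywords and not any(k in username for k in username_keywords):
            continue  # username must contain at least one keyword
        if any_keywords and not any(k in email for k in any_keywords):
            continue  # keyword may sit in the username or the domain
        if one_per_domain and domain in seen_domains:
            continue  # "Only Save One Email per Domain Name"
        seen_emails.add(email)
        seen_domains.add(domain)
        kept.append(email)
    return kept
```

For instance, `filter_emails(found, username_keywords=["info", "hello", "sayhi"])` would keep only addresses whose username contains one of those words. Note that a strict filter trades coverage for relevancy: addresses that do not contain any keyword are dropped even if they belong to a relevant company.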

- Threads per scraper: this means how many keywords you would like to process at the same time per website/source.

- For example, if I select 3 sub scrapers and 2 threads per scraper, this would mean that the software would scrape Google, Bing and Google Maps at 2 keywords per website.

- So, the software would simultaneously scrape Google for 2 keywords, Bing for 2 keywords and Google Maps for 2 keywords.

- You should really only be using the "built-in web browser" if you are using a VPN such as Nord VPN or Hide my Ass VPN (HMA VPN).
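The arithmetic behind the sub scraper and thread settings can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the software's real scheduler: the function names are hypothetical, and it assumes every source works through the same keyword list in lockstep.

```python
from typing import Dict, Iterator, List


def keyword_batches(keywords: List[str], threads_per_source: int) -> Iterator[List[str]]:
    """Yield consecutive groups of keywords, one group per round."""
    if threads_per_source < 1:
        raise ValueError("threads_per_source must be at least 1")
    for start in range(0, len(keywords), threads_per_source):
        yield keywords[start:start + threads_per_source]


def schedule(
    sources: List[str], keywords: List[str], threads_per_source: int
) -> List[Dict[str, List[str]]]:
    """Return a list of rounds; each round maps a source to the keywords it handles at once."""
    return [
        {source: batch for source in sources}
        for batch in keyword_batches(keywords, threads_per_source)
    ]


rounds = schedule(["Google", "Bing", "Google Maps"], ["a", "b", "c", "d", "e"], 2)
# Searches running at the same time in the first round: 3 sources x 2 threads.
concurrent = sum(len(batch) for batch in rounds[0].values())
```

With 3 sources and 2 threads per source, the first round runs 6 searches at once, which is why raising either setting increases the load on your connection and proxies.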

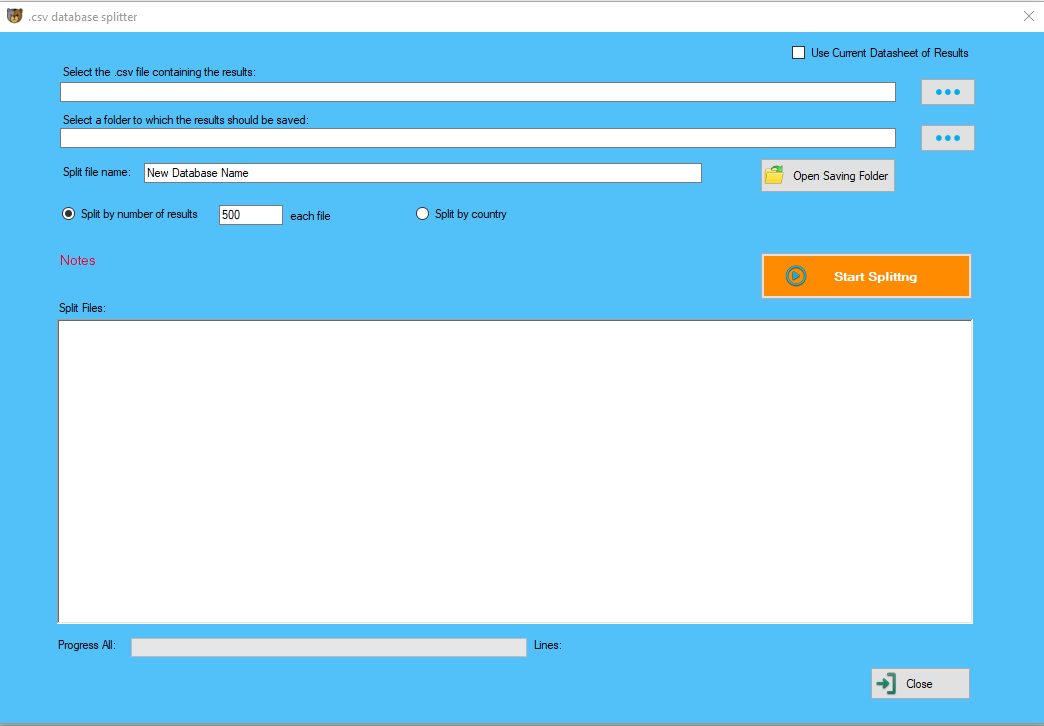

Essentially, if scrapers can outrank Econsultancy and ClickZ, this means both sites have a problem.

"Email Must match Domain": this is a filter to remove all the generic and non-company emails such as gmail, yandex, mail.ru, yahoo, protonmail, aol, virginmedia and so on. A lot of website owners put their personal emails on the website and social media. This filter is especially helpful for complying with the GDPR and similar data and privacy laws.

Amid the growing scrutiny, many torrent sites have started calling themselves search engines for torrents, saying they only provide a way for people to search for torrents. It's a category of torrent sites that don't host any torrent files but offer a way for users to find torrents on other torrent sites. You also have the option to sort the results by date and relevance. This popular meta torrent search engine site is very different from any other BitTorrent-related site you'll see.

Scroogle Scraper lets you take advantage of Google's search engine without compromising your privacy or allowing your searching and browsing habits to be recorded.

That is why in this guide we're going to break down the 7 best Google proxy, API and scraping tools that make getting the SERP data you need effortless. Scraper API is a tool designed for developers who want to scrape the web at scale without having to worry about getting blocked or banned. It handles proxies, user agents, CAPTCHAs and anti-bots so you don't have to. Simply send a URL to their API endpoint or their proxy port and they take care of the rest, making it a great option for companies who want to affordably mine Google SERP results for SEO and market research insights. With plans ranging from $29 for 250,000 Google pages to Enterprise Plans for hundreds of millions of Google pages per month, Scraper API has an option for every budget size.

We've just talked through 7 of the top APIs and proxy solutions for Google search engine results. There are many more, but these seven are the best of the best and should be your first choice when looking for a solution to your SERP data needs.

"[Icon of a Magic Wand] [check box] Automatically generate keywords by getting related keyword searches from the search engines". Add a public proxies scraper tool: auto-check and verify the public proxies, automatically remove non-working proxies and scrape new proxies every X number of minutes. Sometimes, the website scraper will try to save a file from a website onto your local disk. Our devs are looking for a solution to get the website scraper to automatically close the windows.

He is always experimenting with new formats and looking for creative ways to produce, optimize and promote content. He previously wrote for CanadaOne Magazine and helped create and implement online marketing strategies at Mongrel Media.

So when you get emails telling you that a POF.com member wants to meet you, and you click on the link in the email to view their profile, you are directed to a webpage to pay for a POF upgraded membership.

I suggest splitting your master list of websites into files of 100 websites per file. The reason why it is important to split up larger files is to allow the software to run on multiple threads and process all the websites much faster.

One of the most frustrating experiences for any publisher is discovering that someone not only has copied your content but also outranks you on Google for searches related to that content. The Google Scraper Report form doesn't promise any immediate fix, or any fix at all. Rather, it simply asks people to share their original content URL, the URL of the content taken from them and the search results that triggered the outranking. There's a slight downside in that, potentially, someone reported for spamming as a "scraper" might have a valid copyright claim.
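Returning to the earlier suggestion of splitting a master list of websites into files of 100 websites each, the following Python sketch shows one way to do it. The function name, the `websites_001.txt` naming pattern and the output folder are assumptions made for illustration; the only requirement taken from the text is one URL per line with no separators.

```python
from pathlib import Path
from typing import List


def split_website_list(master_file: str, out_dir: str, per_file: int = 100) -> List[Path]:
    """Split a one-URL-per-line text file into numbered files of at most per_file URLs."""
    lines = Path(master_file).read_text(encoding="utf-8").splitlines()
    urls = [line.strip() for line in lines if line.strip()]  # drop blank lines
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for index, start in enumerate(range(0, len(urls), per_file), start=1):
        part = out / f"websites_{index:03d}.txt"  # hypothetical naming scheme
        part.write_text("\n".join(urls[start:start + per_file]) + "\n", encoding="utf-8")
        written.append(part)
    return written
```

Calling `split_website_list("master.txt", "parts")` on a list of 250 URLs would produce three files holding 100, 100 and 50 URLs, each of which can then be selected as a website list source.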

To define it in simple terms, SERPs are basically the responses given by search engines when you enter queries in search boxes. Search engines like Google, Bing or Yahoo get almost all their data from automated crawling bots. Search engine scraping is the process of harvesting URLs, descriptions, or other information from search engines such as Google, Bing or Yahoo. This is a specific form of screen scraping or web scraping dedicated to search engines only.

Here, you'll be amazed to know there are many legal torrent sites that only host legitimate content. Just like Snowfl, Bitcq is also a torrent downloader website that features a very clean and distraction-free interface. It's a BitTorrent DHT search engine that uses the DHT protocol to find the nodes that handle torrent distribution.

For cryptocurrency sites, I would want to see keywords such as crypto, coin, chain, block, finance, tech, bit, etc. However, as was the case with the domain filter above, not all emails will necessarily contain your set of keywords. "Only Save One Email per Domain Name": some domains / websites have several emails, one for customer service, one for marketing, one for returns and so on. This option will save only one email, as you would not want to contact the same company many times. Perhaps you have your own list of websites that you have created using Scrapebox or any other kind of software and you want to parse them for contact details.

Since that time, it has been made clear that if you have duplicate content (essentially, if you are copying another source) then you are going to feel Google's wrath. Boost your SEO with our social media posters, Instagram Management Tool, Search Engine E-Mail Scraper, Yellow Pages scraper, product review generator and contact form posters.

Some programmers who create scraper sites may purchase a recently expired domain name to reuse its SEO power in Google. Whole businesses exist that focus on understanding all[citation needed] expired domains and utilising them for their historical ranking ability.

We do not support the use of any website to download copyrighted content. We also encourage new BitTorrent users to learn the basics of P2P file sharing, its benefits, and the local laws related to it.

Now, Google seems to have heard the complaints and has launched a tool to help. However, if this is happening as I and many others suspect, then essentially Google is indexing the wrong sites, which is bad for users and bad for the people creating original content. This is interesting, given the results of the recent experiment.

Many SERP scrapers like Zenserp also offer image search scraping, in which they collect images related to the search query.

As you scroll down, you can find torrent sites divided into different categories. The UI is neat and clean, and you won't see any annoying ads popping out of nowhere. Using the search button at the top left, you can search for the torrent you like and get the relevant BitTorrent download. It simply uses metadata like names and sizes to list downloadable torrent files.

Just like other torrenting sites mentioned in this list, Snowfl.com is a torrent aggregator that goes through various public torrent indexes when you search for a query on the site. What sets this site apart from other sources is its minimal interface and night mode option to soothe your eyes. You can use the search bar to find torrents from Torrentz2's index of over 61 million torrents sourced from 90+ torrent sites.

View our video tutorial showing the Search Engine Scraper in action. This feature is included with ScrapeBox, and is also compatible with our Automator Plugin. Training new engines is pretty easy; many people are able to train new engines just by looking at how the 30 included search engines are set up. We have a Tutorial Video, or our support staff can help you train the specific engines you need.

Scraping is usually targeted at certain websites, for specific data, e.g. for price comparison, so scrapers are coded quite differently. For example, if you are a jeweller who makes wedding rings, you may want to contact all the jewelry stores and wedding dress shops in the world to offer them your wedding rings to stock, or to collaborate with you.

However, the specifics of how Instagram works are different from other sources. We should add some simple options under the Instagram drop-down for whether to search for users, hashtags, or both. We should also add an ability to log in / add login details for an Instagram account under the last tab inside the settings. We may add an option to "Skip subdomain sites", as those tend to be web 2.0 and contain a lot of spam. Inside each column name, i.e. url, email, website, address, we should add a check box so that a user can select exactly what data to scrape. And in the first column we could have one checkbox to select all or select none.

This action needs to be performed in order to give the website scraper full writing permissions.

Some scraper sites are created to make money by using advertising programs. The derogatory term for them, Made for AdSense (MFA), refers to websites that have no redeeming value except to lure visitors to the website for the sole purpose of clicking on advertisements.

Even bash scripting can be used together with cURL as a command line tool to scrape a search engine. When developing a scraper for a search engine almost any programming language can be used, but depending on performance requirements some languages will be favorable. The quality of IPs, methods of scraping, keywords requested and language/country requested can greatly affect the possible maximum rate. To scrape a search engine successfully the two major factors are time and amount. In the past years search engines have tightened their detection systems nearly month by month, making it more and more difficult to scrape reliably, as developers need to experiment and adapt their code regularly.

Immediately after payment PayPal will direct you to the download file so you can start using POF Username Search Desktop Software right away.

First on our list is Scraper API because it gives the best performance for the lowest price versus everyone else on this list. Boasting a 100% success rate and an easy-to-use API, this solution is great for anyone who needs to be guaranteed fast, high-quality search engine data. However, with prices starting at $50 for 50,000 Google searches, using SERP API as your main source of SERP data can be expensive if you need large volumes of data.

Gain access to cutting-edge SEO and lead generation software and niche-targeted B2B databases. Become a better SEO with six advanced search tips that will help you find guest posting opportunities, discover missed internal linking opportunities, unearth duplicate content, and more.

Google recently announced an enterprise anti-bot solution called reCAPTCHA Enterprise. The service promises to stop scrapers, hackers and other software-based attacks.

BingScraper is a python3 package with functions to extract the text and image content from the search engine `bing.com`. The script running in the background requests a search term and creates a directory (if not made previously) in the root directory of the script, where all the content of that particular search is stored. The script downloads the hypertext and the hyperlink to that text and saves them to a .txt file inside the directory it made. This directory stores the text content as well as the images downloaded by the script.

Note that link-only answers are discouraged; SO answers should be the end-point of a search for a solution (vs. yet another stopover of references, which tend to get stale over time).
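The directory-per-search behaviour described above can be illustrated with a short standard-library Python sketch. This is not BingScraper's code or API: the names `LinkCollector` and `save_results`, the `results.txt` file name and the tab-separated layout are all assumptions, and fetching the page is deliberately left out, so the function takes HTML you have already obtained.

```python
from html.parser import HTMLParser
from pathlib import Path
from typing import List, Optional, Tuple


class LinkCollector(HTMLParser):
    """Collect (link text, href) pairs from every <a href="..."> element."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)  # text inside the currently open link

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def save_results(search_term: str, html: str, root: str = ".") -> Path:
    """Write one 'text<TAB>url' line per link into <root>/<search_term>/results.txt."""
    folder = Path(root) / search_term.replace(" ", "_")
    folder.mkdir(parents=True, exist_ok=True)  # created only if not made previously
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    out_file = folder / "results.txt"
    lines = "".join(f"{text}\t{href}\n" for text, href in parser.links)
    out_file.write_text(lines, encoding="utf-8")
    return out_file
```

Because the parser keys on generic `<a href>` elements rather than on a particular page layout, it is less sensitive to the small HTML markup changes mentioned earlier, at the cost of also collecting navigation links that a real scraper would need to filter out.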